
Thursday, November 22, 2012

Thanksgiving Thoughts…

www.SpeechTechnologyGroup.com via http://www.speechtechnologygroup.com/speech-blog - We tend to forget that happiness doesn't come as a result of getting something we don't have, but rather of recognizing and appreciating what we do have. Happy Thanksgiving! Gerd Graumann, www.SpeechTechnologyGroup.com ...

Job opening hints at new languages for Apple’s Siri - iPad/iPhone

New Apple job openings indicate that the company is working on bringing its Siri voice assistant to lots more languages, including Arabic, Danish, Dutch, Finnish, Norwegian and Swedish.

9To5Mac reports that the job listing, which was posted on Apple’s website in mid-November, is for a six-month internship for candidates who can speak several languages that are not currently available for Siri.

The new employee would be part of “the team responsible for delivering Siri in different parts of the world for an exciting 6 month internship in Cupertino, California.”

At present, Siri is available in English, Spanish, French, German, Korean, Japanese, Mandarin, Cantonese, and Italian, across the US, UK, Australia, France, Germany, Japan, Canada, China, Hong Kong, Italy, Korea, Mexico, Spain, Switzerland and Taiwan.

Yesterday, we reported that Apple could be planning to bring Siri to Mac OS X 10.9 next year, and that the new operating system is rumoured to be called ‘Lynx’.

Tuesday, November 20, 2012

NAB uses voice recognition to authenticate banking customers

National Australia Bank (NAB) has deployed biometrics technology to allow customers to access their bank accounts using their voice.

Voice authentication is currently being used for calls to the NAB contact centre but could eventually be used for ATMs, NAB executive general manager Adam Bennett told media at a lunch in Sydney.

NAB estimates the system saves users three minutes on the phone and reduces the likelihood of fraud. Rather than ask for a password or security questions, voice biometrics authenticates users by listening to their voice.

Ad - Clearly recognizing this growing trend, companies like Speech Technology Group in San Diego are now offering on-premise or cloud-based voice biometrics systems.

NAB first trialled voice biometrics in 2009 and has now rolled it out to 140,000 customers, Bennett said. The underlying technology was developed with Telstra, he said.

NAB is not the only bank exploring new methods of customer authentication. ANZ Bank has said it’s exploring using fingerprint-recognition technology to replace traditional PIN codes.

Voice biometrics is critical to NAB’s effort to prevent fraud, Bennett said, and has 120 security points, compared to 40 points on a fingerprint.

“We think it’s more robust than fingerprints,” he said.

“Our customers are increasingly interacting with voice platforms,” including in-car communications and Siri on the iPhone.

To prevent a malicious individual from using a recording to fraudulently access accounts, the NAB system asks a series of questions. “It’s extremely difficult to pre-record something that will answer the questions,” Bennett said.

Voice biometrics in ATMs is “something we can look at,” Bennett said. Call centres were an obvious first application because they rely on voice, “but we will continue to run experiments and we will continue to look at where is there a meaningful application for this type of technology,” he said.

It’s not decided what technology will eventually replace PIN codes at the ATM, said NAB group executive, Gavin Slater. “Everyone’s kind of looking at options, and I think it’s quite well into the future” when one authentication method becomes standard, he said.

Across the financial industry, “card not present is still the highest generator of fraudulent transactions,” Slater said. A card-not-present transaction is a payment in which a card is not physically scanned by a payment terminal.

Voice biometrics is just one example of industry investment addressing that issue, Slater said.

Thursday, November 15, 2012

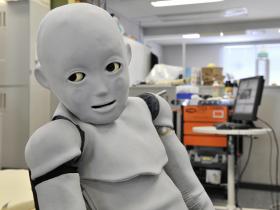

Embracing Your Inner Robot: A Singular Vision Of The Future

Originally published on Wed November 14, 2012 5:13 pm

Last week I went to a lecture by the inventor and futurist author Ray Kurzweil, who was visiting Dartmouth College for a couple of days. Kurzweil became famous for his music synthesizers and his text-to-speech software, which are of great help to those who can’t read or are blind. Stevie Wonder was one of his first customers. His main take, that the exponential advance in information and computer technology will deeply transform society and the meaning of being human, resonates with many people and scares a bunch more.

Using his exponential curve for processing-power-per-dollar increase, Kurzweil estimates that by 2045 we will reach the “Singularity,” a point of no return where people and machines will reach a deep level of integration. You can watch Kurzweil walk through his ideas at bigthink.com.

For those who can afford it — and that’s a whole topic of discussion by itself: what will happen to those who can’t? — life will be something very different. Lifespan will be enormously extended, death will become an affliction and not an inevitability. I guess only taxes will remain a certainty!

Are such scenarios sci-fi or the reality of the future?

Take synthetic biology, for example, the ability to reprogram the genes of existing creatures to make them do what we want them to do. We might have bacteria create electricity and clean water from waste, produce blood, vaccines, fuels or whatever we fancy. We could recreate specific bodily organs to replace those that are malfunctioning, using your own DNA; boost your immunity against effectively anything; enhance intelligence and memory.

Advances in the speed with which we read genomes have been so dramatic that we now talk about using DNA as a storage device. After all, DNA encodes information in very clear ways and we could manipulate it to encode any information we want. As George Church and Ed Regis write in their thought-provoking recent book Regenesis: How Synthetic Biology Will Reinvent Nature, in principle we could store the whole of Wikipedia (in all languages) on a chip the size of a cell, for a cost of $1 for 100,000 copies.

Human-machine integration, in fact, is already happening at many levels: most people feel lost without their cell phone, as if a part of their body or self is missing.

According to Kurzweil, by 2029 computers will be powerful enough to simulate the human brain. From his “Law of Accelerated Returns” he estimates that in 25 years we will have technologies billions of times more powerful than we have today. Just think that five years ago social media, today a transformative force in the world, was practically nonexistent, or that the biggest computers in the 1970s were a million times more expensive and a thousand times less efficient than the chips we have in our smartphones, representing a billion-fold increase in computing efficiency per dollar.
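The arithmetic behind these figures can be checked in a few lines. The sketch below multiplies the two 1970s ratios quoted above and works out what doubling time a billion-fold gain over 25 years would imply. The steady-doubling model and the function names are assumptions made for illustration, not Kurzweil's own formula.

```python
import math


def combined_gain(cost_ratio: float, efficiency_ratio: float) -> float:
    """Efficiency per dollar improves by the product of both ratios."""
    return cost_ratio * efficiency_ratio


def implied_doubling_time_years(total_gain: float, years: float) -> float:
    """Doubling time needed to reach total_gain in the given number of years,
    assuming (hypothetically) one constant doubling period throughout."""
    doublings = math.log2(total_gain)
    return years / doublings


# A million times cheaper and a thousand times more efficient.
gain = combined_gain(1e6, 1e3)

# A billion-fold gain in 25 years, expressed as a doubling time in months.
months = implied_doubling_time_years(1e9, 25) * 12

print(f"combined gain: {gain:.0e}")
print(f"implied doubling time: {months:.1f} months")
```

Under that simplifying assumption, a billion-fold improvement in 25 years corresponds to roughly 30 doublings, or one doubling about every ten months.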

Singularity, in the case of black hole physics (Kurzweil’s inspiration for the term), represents a breakdown point, where the laws of physics as we currently understand them stop making sense. This doesn’t mean that there is an a priori reason for us not to understand the physics near the singularity, but that we currently don’t have the theoretical tools to do so.

In the case of artificial intelligence plus synthetic biology, the final alliance between humans and bio-informatics technology, it’s much harder to predict what could happen.

Every new technology can be applied for good or for evil (or both). If, like Kurzweil, we take an optimistic view and see how humanity has benefited from technology as a harbinger of things to come (we live longer and better, and although we kill more efficiently, we kill less), the Singularity will bring a new stage in the history of evolution, prompted by one of its creations: us.

The body will be superfluous, since we are, in essence, coiled information, a blueprint, a sequence of instructions that can be duplicated at will. Will you then become a memory stick that can be inserted into a machine that can make copies of you? Or, perhaps, we will become a super-evolved character in a video game, an intelligent Sims person, convinced that the virtual reality surrounding us is real. After all, to simulate reality in ways identical to what we perceive is simply a matter of data gathering, advanced software, and processing power, all promises of this upcoming future.

If this is what’s next, and odds are it is, we should start thinking of the consequences springing out of humanity’s redefinition. For one thing, it’ll be important to make sure copies of yourself are backed up in a safe location. You never know when an evil mind might decide to delete you from existence! That’s right, in this new future we won’t die anymore; we will be deleted.

You can keep up with more of what Marcelo is thinking on Facebook and Twitter: @mgleiser


Google TV Makes Voice Controls Look Useful

Google has stepped into the future of television with its new voice recognition feature, a seemingly gimmicky addition that Google manages to make look pretty useful. In its promotional video, Google shows us how we might use this voice control software, and it looks like the kind of thing we would want out of our TV, if it actually works.

- Our Google TV user doesn’t have to remember what channel a show is on: He can just speak the program into the remote and it shows up.

- If he doesn’t know the name of the program, he can describe the show to his remote, and the television does its smart thing and helps him find it.

- After he asks it to teach him something, the TV surfaces what looks like a how-to YouTube video.

It’s more than a speaky version of a remote control; it makes finding things easier, or so it seems.

A recent survey found that a main consumer worry with voice recognition is functionality. “There is distrust towards commanding your home environment with voice; the users doubt especially its functionality,” write the authors. Siri, for example, lost favor with the masses for not knowing things. (The promotions for the bot, in a sly way, led us to believe otherwise, too.)

At this point Google has us intrigued. It will win us over once widespread consumer use puts it to the test. For now, talking to a TV remains one of those bells and whistles that television makers are fastening onto new sets but that aren’t being widely used, like 3D compatibility. (A Samsung spokesperson basically admitted to Bloomberg Businessweek’s Cliff Edwards that voice controls are mostly a marketing gimmick.) It’s still not clear it’s a feature anyone really wants. A recent survey of iPhone 4S owners found that only 37 percent of people would welcome Siri onto their TVs and 20 percent said they didn’t want the talking bot on their sets at all. But even with this reluctance on the consumer end, with voice controls making a notable appearance at this year’s Consumer Electronics Show, it’s no surprise that Google has followed Samsung and Microsoft with its own version. The surprise is that Google makes it look like something we might want to use.

Thursday, November 8, 2012


Software turns English into synthesized Chinese almost instantly.

Software that instantly translates spoken speech could make communicating across language barriers far easier.

It could be the next best thing to learning a new language. Microsoft researchers have demonstrated software that translates spoken English into spoken Chinese almost instantly, while preserving the unique cadence of the speaker’s voice—a trick that could make conversation more effective and personal.

The first public demonstration was made by Rick Rashid, Microsoft’s chief research officer, on October 25 at an event in Tianjin, China. “I’m speaking in English and you’ll hear my words in Chinese in my own voice,” Rashid told the audience. The system works by recognizing a person’s words, quickly converting the text into properly ordered Chinese sentences, and then handing those over to speech synthesis software that has been trained to replicate the speaker’s voice.

Video recorded by audience members has been circulating on Chinese social media sites since the demonstration. Rashid presented the demonstration to an English-speaking audience in a blog post today that includes a video.

Microsoft first demonstrated technology that modifies synthesized speech to match a person’s voice earlier this year (see “Software Translates Your Voice Into Another Language”). But this system was only able to speak typed text. The software requires about an hour of training to be able to synthesize speech in a person’s voice, which it does by tweaking a stock text-to-speech model so it makes certain sounds in the same way the speaker does.

AT&T has previously shown a live translation system for Spanish and English (see “AT&T Wants to Put Your Voice in Charge of Apps”), and Google is known to have built its own experimental live translators. However, the prototypes developed by these companies do not have the ability to make synthesized speech match the sound of a person’s voice.

The Microsoft system is a demonstration of the company’s latest speech-recognition technology, which is based on learning software modeled on how networks of brain cells operate. In a blog post about the demonstration system, Rashid says that switching to that technology has allowed for the most significant jump in recognition accuracy in decades. “Rather than having one word in four or five incorrect, now the error rate is one word in seven or eight,” he wrote.
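Rashid's "one word in four or five" versus "one word in seven or eight" corresponds to a word error rate falling from roughly 20 to 25 percent to roughly 12.5 to 14 percent. As a rough illustration of how such a figure is commonly computed, the sketch below uses the standard word-level edit distance (substitutions, insertions and deletions divided by the reference length). The function name and the sample sentences are made up for the example, and nothing here is taken from Microsoft's system.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between the two transcripts,
    divided by the number of words in the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Illustrative transcripts: eight reference words, one misrecognized.
reference = "you will hear my words in my voice"
hypothesis = "you will hear my words in my choice"
wer = word_error_rate(reference, hypothesis)
print(wer)  # 0.125, i.e. one word in eight
```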

Microsoft is not alone in looking to neural networks to improve speech recognition. Google recently began using its own neural network-based technology in its voice recognition apps and services (see “Google Puts Its Virtual Brain Technology to Work”). Adopting this approach delivered between a 20 and a 25 percent improvement in word error rates, Google’s engineers say.

Rashid told MIT Technology Review by e-mail that he and the researchers at Microsoft Research Asia, in Beijing, have not yet used the system to have a conversation with anyone outside the company, but the public demonstration has provoked strong interest.

“What I’ve seen is some combination of excitement, astonishment, and optimism about the future that the technology could bring,” he says.

Rashid says the system is far from perfect, but notes that it is good enough to allow communication where none would otherwise be possible. Engineers working on the neural network-based approach at Microsoft and Google are optimistic they can wring much more power out of the technique, since it is only just being deployed.

“We don’t yet know the limits on accuracy of this technology—it is really too new,” says Rashid. “As we continue to ’train’ the system with more data, it appears to do better and better.”

Monday, November 5, 2012

Will Nuance’s Nina Do What Apple’s Siri Won’t?

Apple’s voice-based Siri interface for the iPhone has forever changed consumer expectations of how to interact with their computing devices. But Nuance’s Nina may represent an even bigger transformation: the consumerization of IT. Nuance, headquartered in Burlington, Massachusetts, has over 10,000 employees, $1.4 billion in revenue in FY ’11 and a $7.65 billion market cap, and is best known for its Dragon NaturallySpeaking voice recognition software. It just might be the biggest, most successful company you have never heard of. The company describes itself as “focused on developing the most human, natural intuitive ways to use your voice to take command of information.”

Siri is cool. But Nina may represent a true leap forward in man-machine learning and artificial intelligence. I recently spoke with Gary Clayton, Nuance’s Chief Creative Officer, about his role in bringing Nina to life and his thoughts on how Nina is already changing, for the better, the way businesses serve their customers. He’s the guy responsible for turning some of the world’s most sophisticated software algorithms and artificial intelligence into engaging and user-friendly interfaces. He also oversees innovation, strategy and design at Nuance. “I wear a lot of hats,” said the understated Clayton.

The major innovation behind Nina is its capability to retain context over time. People can interact with Nina, the virtual assistant for customer service apps, and carry on a complex set of instructions within the same conversation flow. Its artificial intelligence learns and anticipates the user’s interests and requests over time, using natural language understanding. For example, a person can ask Nina what their checking account balance is, then ask to see the charges over $200, and then narrow those to the month of August. Or one could go through the bill paying process by simply stating “I would like to pay the balance on my cable bill on Friday from my savings account.” Humans communicate through context, not through the complex, detailed step-by-step instructions that have always been the hallmark of human-to-computer interaction.
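To make "retaining context" concrete, here is a minimal sketch of a conversation object that remembers earlier refinements, so a follow-up such as "and then for August" applies on top of "charges over $200" instead of starting from scratch. Everything in it (the `Charge` and `ConversationContext` classes, the sample data) is hypothetical and says nothing about how Nuance actually builds Nina.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(frozen=True)
class Charge:
    payee: str
    amount: float
    month: str


@dataclass
class ConversationContext:
    """Keeps every filter the user has asked for so far in this conversation."""
    charges: List[Charge]
    filters: List[Callable[[Charge], bool]] = field(default_factory=list)

    def refine(self, predicate: Callable[[Charge], bool]) -> List[Charge]:
        # Remember the new constraint, then apply all constraints together.
        self.filters.append(predicate)
        return [c for c in self.charges if all(f(c) for f in self.filters)]

    def reset(self) -> None:
        # Start a fresh topic: forget the accumulated constraints.
        self.filters.clear()


# Made-up account data for the example.
charges = [
    Charge("Cable Co", 250.0, "August"),
    Charge("Grocer", 80.0, "August"),
    Charge("Airline", 420.0, "July"),
]
context = ConversationContext(charges)

# "Show me the charges over $200" ...
over_200 = context.refine(lambda c: c.amount > 200)
# ... "and then for the month of August" builds on the previous request.
august_over_200 = context.refine(lambda c: c.month == "August")

print([c.payee for c in over_200])         # ['Cable Co', 'Airline']
print([c.payee for c in august_over_200])  # ['Cable Co']
```

A real assistant would also need speech recognition and language understanding to turn each utterance into such a constraint; the sketch covers only the bookkeeping that lets the second request inherit the first.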

Imagine calling your insurance company and having a pleasant and successful interaction with an always friendly voice. No more yelling “Operator!” and swearing into the phone. Nina can also interact across devices and applications, so that customers can choose to connect by voice, mobile device or web page, or any combination, and still retain the context of the interaction. In fact, one such enlightened financial services company, USAA, is implementing Nina to create a better customer experience. “USAA is extraordinarily responsive to their customers; one of the very best in their field and represent a gold standard in managing the customer experience,” said Clayton.

“People like to anthropomorphize technology,” stated Clayton. He knows it’s a basic human need to understand and control the world around us. Nina is one expression of meeting that need. That’s what drives Clayton in what he calls his never-ending quest to understand the creativity behind science and art. He started his quest as a physics undergrad at SUNY and later ventured to San Francisco for interdisciplinary studies and eventually earned his BA in communication from San Francisco State University. He sees creativity as the synthesis of art and science.

This led to a fascinating career path that began with the explosion of Silicon Valley technology drawing the film business to Northern California. There, Francis Ford Coppola, George Lucas and others set up shop. Clayton worked with all of them but most notably Lucas and his Skywalker Ranch studios in Marin County, California, where he engineered sound recordings, which included the first recordings at Skywalker Sound with the San Francisco Ballet Orchestra. He founded and ran his own multi-media production company from 1985 to 2000 and worked on many Academy Award-winning films, Grammy-winning albums and Emmy-winning TV shows. There he worked on projects with Michael Jackson, Dave Brubeck, The Cure, Brian Eno, David Bowie, Mel Torme, Sam Shepard, David Byrne, Norman Mailer, Apple Computer (Knowledge Navigator, Newton) and many others. After a succession of consulting projects at Pacific Bell and a start-up gig at TellMe (acquired by Microsoft for a reported $800 million in 2007), he spent time at Yahoo, where he headed up their speech strategy. From there he landed at Nuance in 2008. Clayton is the owner of eight patents and is considered one of the leaders in the digital speech recognition movement.

As one of the key developers of the Dragon Go! and Nina product lines, he is helping to push Nuance into the forefront of turning mobile device personal assistants into personal advisers. His vision of the synthesis of art and science may be a never-ending process, but his work on Nina just may be the full fruition of a lifetime of trying.

Apple’s ‘Mr Fix It’ Eddy Cue profiled - Apple Business

Eddy Cue, the Apple exec who is now tasked with fixing Apple’s voice-recognition PA Siri and its disastrous iOS 6 Maps app, has been profiled in a number of articles.

Cue, given the nickname ‘Mr Fix-It’ in the Cnet report (although perhaps in the UK that name has slightly less positive connotations), is “a longtime Apple executive who led its transformation into the world’s largest music retailer,” writes Business Insider.

As the ‘Mr Fix-It’ of Apple, senior vice president of internet software and services, Eddy Cue has a number of successes to his name, according to the Business Insider report.

Cue built Apple’s online store, “a big move at the time,” according to the report, which “gave Apple a foundation in e-commerce that Jobs later credited as crucial for creating iTunes.”

Cue also built the iTunes Store, striking deals with “wary record labels” as a “door opener with entertainment companies” and “turned Apple into the largest music retailer in the world”. The reports note a number of occasions where Cue negotiated “heated discussions” with labels, and managed to maintain Apple’s top position in music. Cnet suggests that Cue “often played the good cop to Steve Jobs’ bad cop,” when dealing with the labels. Although, according to the report, music executives said: “Eddy did a lot of bad cop, too.”

Cnet claims that five years ago, Cue helped prevent the relationship between Apple and the large record companies from collapsing when the sides almost ‘went nuclear’.

After the success of iTunes, Cue went on to oversee the App Store. Looking to the future, it is likely that Cue is busily negotiating deals to get content for the fabled Apple television.

Cue’s ‘Mr Fix It’ title comes from the fact that he was tasked with fixing MobileMe after it flopped. That service was eventually replaced by iCloud.

“Cue’s got a tough job. The good news for Apple is that he’s done tough jobs before,” notes Business Insider.

Cnet includes a number of comments about Cue from various sources.

CEO of Major League Baseball Advanced Media Bob Bowman said: “There’s nobody quite like Eddy Cue at Android or any of the other competitors. Eddy is genius, brilliant, thoughtful, and tough.”

Music-tech attorney Chris Castle said: “My impression was that he was very clearly about Apple’s interest, but it was clear he also wanted to be fair. Apple never tried to steal music like many of these other guys. They cared about content. It was never about what they could get away with. With Eddy you felt you had a fair hearing.”

Regarding his negotiations with record labels, one music industry exec said: “You have a point in time when everyone was going to draw their weapons, but the extremely shrewd way Eddy handled it took all the fight out of the industry’s sword rattlers.”

One reason for his negotiating successes may be his “calm demeanor”, notes one source. “I really respected [Cue] and his thoughtful, calm demeanor. Unlike a lot of tech people he listened to us when we were doing his deals.”

Another executive told Cnet: “Eddy doesn’t care about those other guys, the flashy executives who want the spotlight. He’s the kind of person who is happy to be in the engine room making sure that everything is clicking along.”

Meet the comic who doesn’t say a word

Different kind of funny: comedian Patrick Monahan says “Lee is amazing! He’s so fresh, unique and very very funny! We are all doing comedy in 3D, Lee is doing it in 4D!” Picture: lostvoiceguy.com

At first the laughs are, perhaps understandably, nervous. But then Lee Ridley is far from a conventional stand-up comic.

For a start, he doesn’t utter a single word on stage.

Lee, who has cerebral palsy, cannot speak so he uses a text-to-speech iPad app to deliver his lines. During his show, Lost Voice Guy, lines are delivered in the synthetic tones of a computer-generated male voice.

“When I realised I’d never be able to talk again, I was speechless,” he jokes, to chuckles from the audience.

In a recent stunt at the UK’s X Factor auditions, he used an iPad to deliver a rendition of R Kelly’s “I Believe I Can Fly”. Unfortunately, the judges didn’t see the funny side, and he was cut short after a few verses.

Still, the experience has made ripe material for his act: “I used to be in a disabled Steps tribute band. We were called Ramps. We faced an uphill struggle.” At this the audience erupts with laughter.

Over the weekend, Lee was in London for a gig in Covent Garden. Our interview is conducted via a specialised computer called a Lightwriter rather than the iPad app, which is “more fun for the show but harder to type on - this is what I use in everyday life”, says Lee.

The Lightwriter is portable and has two screens so I can see the words as Lee writes them. I ask a question and after a few taps of the keypad, the computer voice replies.

He says: “I’ve always loved stand-up but I never thought about trying it myself until friends suggested that it might work and that I’d be unique.

“I’m comfortable making fun of myself. People don’t expect it and there’s that awkward feeling in the room initially when I get on stage. But when I’m funny that goes away.”

Lee’s first gig was at a friend’s comedy night in Sunderland in February and he has been overwhelmed at the positive response so far - he recently supported Ross Noble on tour while “Little Britain” star Matt Lucas is a fan. Now he is planning a tour of his own next year.

“I was very nervous. I thought no one would understand me,” explains Lee. “After a few minutes of it going well I started to enjoy it. It was a massive buzz knowing people were laughing at stuff I’d written. I managed only two hours’ sleep afterwards because I was on such a high.”

Cerebral palsy is an umbrella term covering a range of neurological conditions that affect movement, co-ordination and speech. It is caused by damage to the brain that can happen during pregnancy, birth or soon after.

Aged six months, Lee contracted the brain infection encephalitis - triggered by a cold sore - which put him in a coma for two weeks. It left him with hemiplegia, a common type of cerebral palsy.

Lee’s right side is much weaker than his left, which means he walks with a limp. He finds it hard to swallow and the muscles in his mouth and tongue are too weak for him to speak - despite years of working with a speech therapist in early childhood.

About three-quarters of people with cerebral palsy suffer from some sort of speech difficulty. Lee, who grew up in Co Durham in England, attended a specialist primary school where he learned sign language.

Aged 12, he was given his purpose-built Lightwriter. Despite its American-accented voice, you can still detect a hint of Geordie in his syntax and in words such as Mam, which he spells phonetically.

“I take the Lightwriter for granted now but it changed my life and made me a lot more independent,” says Lee, who works at Newcastle City Council’s press office.

“It’s frustrating that I can’t just instantly say something, that I have to type it out first - although if I’m angry that can be a good thing.”

Despite his disability, teachers at his primary school realised Lee was very bright and needed to be challenged. When he was old enough, he was able to attend many lessons at a mainstream secondary school and he went on to gain a place at the University of Central Lancashire to study journalism.

“Luckily I had a really good English teacher who pushed me to my limits. I’ve always loved writing and English and until now journalism was all I ever wanted to do,” says Lee, who has had stints as a sports reporter for his local newspaper and the BBC. “I always seem to choose strange careers for someone who can’t speak.”

He is able to do most of his research via email but uses his Lightwriter device to conduct interviews over the phone.

“Some people just assume I’m an answering machine or get impatient but I’m used to it and it’s turned into great comedy material,” he says.

Friday, November 2, 2012

Research at Google - Google+ - Large Scale Language Modeling in Automatic Speech…

At Google, we’re able to use the large amounts of data made available by the Web’s fast growth. Two such data sources are the anonymized queries on google.com and the web itself. They help improve automatic speech recognition through large language models: Voice Search makes use of the former, whereas YouTube speech transcription benefits significantly from the latter. The language model is the component of a speech recognizer that assigns a probability to the next word in a sentence given the previous ones. As an example, if the previous words are “new york”, the model would assign a higher probability to “pizza” than say “granola”. The n-gram approach to language modeling (predicting the next word based on the previous n-1 words) is particularly well-suited to such large amounts of data: it scales gracefully, and the non-parametric nature of the model allows it to grow with more data. For example, on Voice Search we were able to train and evaluate 5-gram language models consisting of 12 billion n-grams, built using large vocabularies (1 million words), and trained on as many as 230 billion words. The computational effort pays off, as highlighted by the plot below: both word error rate (a measure of speech recognition accuracy) and search error rate (a metric we use to evaluate the output of the speech recognition system when used in a search engine) decrease significantly with larger language models.
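The "new york" example can be reproduced with a toy model. The sketch below trains a 3-gram model by plain counting on four made-up sentences and then asks for the probability of the next word given the previous two. The corpus, function names and the absence of any smoothing or backoff are simplifications assumed for illustration; the post does not describe Google's actual estimation pipeline at this level of detail.

```python
from collections import Counter, defaultdict


def train_ngram_counts(sentences, n):
    """Count how often each word follows each (n-1)-word context."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # Pad the start so the first real words also have a full context.
        tokens = ["<s>"] * (n - 1) + sentence.lower().split()
        for i in range(n - 1, len(tokens)):
            context = tuple(tokens[i - n + 1:i])
            counts[context][tokens[i]] += 1
    return counts


def next_word_probability(counts, context, word):
    """Maximum-likelihood estimate of P(word | context), with no smoothing."""
    seen = counts.get(tuple(w.lower() for w in context))
    if not seen:
        return 0.0
    return seen[word.lower()] / sum(seen.values())


# Tiny illustrative corpus (not real query data).
corpus = [
    "new york pizza is great",
    "best new york pizza",
    "new york pizza delivery",
    "new york granola bar",
]
model = train_ngram_counts(corpus, n=3)

p_pizza = next_word_probability(model, ("new", "york"), "pizza")
p_granola = next_word_probability(model, ("new", "york"), "granola")
print(p_pizza, p_granola)  # 0.75 0.25
```

Because the model is just a table of counts, it grows as more text is added, which is the "non-parametric" property the post refers to. A model used in practice would also need smoothing so that unseen word sequences do not get probability zero.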

http://goo.gl/GqHOs: A more detailed summary of results on Voice Search and a few YouTube speech transcription tasks, written by +Ciprian Chelba, +Dan Bikel, +Masha Shugrina, +Patrick Nguyen and Shankar Kumar (http://goo.gl/QhQCl), presents our results when increasing both the amount of training data, and the size of the language model estimated from such data. Depending on the task, availability and amount of training data used, as well as language model size and the performance of the underlying speech recognizer, we observe reductions in word error rate between 6% and 10% relative, for systems on a wide range of operating points.
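A "relative" reduction is measured against the starting error rate, not in percentage points. The short sketch below shows the difference using a hypothetical 20 percent baseline word error rate; that baseline is an assumption chosen for round numbers, not a figure from the report.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction of the baseline error rate that was removed."""
    return (baseline - improved) / baseline


def apply_relative_reduction(baseline: float, relative: float) -> float:
    """Error rate that remains after a given relative reduction."""
    return baseline * (1.0 - relative)


baseline_wer = 0.20  # hypothetical starting point

# The 6% and 10% relative reductions quoted above, applied to that baseline.
after_6_percent = apply_relative_reduction(baseline_wer, 0.06)
after_10_percent = apply_relative_reduction(baseline_wer, 0.10)

print(f"{after_6_percent:.3f} {after_10_percent:.3f}")  # 0.188 0.180
```

On that assumed baseline, a 10% relative reduction moves the word error rate from 20% to 18%, a change of two percentage points.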

Thursday, November 1, 2012

Cars With Social Media Plugins - Social News Daily

While some drivers have said that they don’t want social media like Facebook and Twitter in their cars, car manufacturers are touting the new technology in the dashboards of their cars.

Apple’s Siri technology is already being considered for BMW, Audi, Chrysler, Honda, General Motors, Jaguar, Land Rover, and Toyota cars, but that is different from the five vehicles that have social media built into the dashboard.

Social Media Today notes that the five cars are the Honda Accord, Ford Evos, Mercedes-Benz, Cadillac, and Toyota Entune.

The Honda Accord features a social media connection through a touch screen panel on the center of the dashboard. While the vehicle’s commercial advertises the ability to text while on-the-go, the touch screen also provides the foundation for more involved plugins.

While the Ford Evos is not yet in production, the concept has been imagined as the socially networked car for the future. The car comes with plugins that will allow drivers to like, share, and check in while on the go. It is being advertised as a next-generation car for the next generation.

Luxury car maker Mercedes Benz is planning to add a telematic system to its cars. They claim that the new system will let drivers interact with Facebook, Yelp, and other social media services while they are at the wheel. The system’s first car will be the SLK Roadster — likely a move to court younger buyers.

Cadillac and most other GM cars already have an OnStar system, and that system will be bringing a version of social media into the vehicles. It will allow drivers to access Facebook and will even read their Twitter feeds aloud to them.

Toyota has introduced their Entune system, which includes a mass of social media apps. These apps include a friend-finding function to notify drivers when their friends are in the same area. The system is geared toward younger, more social buyers. They plan to roll out Toyota Entune in the next couple of years.

While the idea of a social media car has fantastic potential for technological progress, and the functionality could make cars more interactive, it could also be disastrous if the driver is constantly distracted. Considering the federal government has already spent billions trying to get drivers to stop texting and talking on their cell phones while driving, it could be very disastrous to have a car in which you can text, post status updates, and check in while driving.

Is Google’s New ‘Siri Killer’ Deadly Enough To Take Her Out?

Siri is a wanted woman. And Google’s on her trail.

For the last year, Apple’s iPhone app has been sucking up all the oxygen regarding voice-controlled digital assistants. Now Uncle Googs is striking back with a sassy-voiced companion of its own for iPhones. And according to the search giant, their assistant has the advantage of knowing what Google knows… basically everything.

A new web video brags that you can ask sophisticated queries like “What does Yankee Stadium look like” and get a page full of photos back. Siri, by comparison, will show you a map and driving directions. Or try “how many people live in Cape Cod” and Google’s technology will read you the answer. Ironically, Siri sends you to a Google search page with the same information. You’ll have to read it yourself.

Asked “what’s a beluga whale,” both apps offered up a wiki-like platter of data. Google read the top entry and showed links. Siri was silent but offered up a pretty comprehensive data sheet on the giant sea mammal.

When it comes to evildoing, Siri is the clear winner. Finding an escort service? Check. Hiding a dead body? Check. Ask Google’s app the same questions and it brings up YouTube videos showing people successfully using Siri. Ouch.

I also told Google’s search app that I wanted an abortion (Apple took heat last year for not enabling Siri to help; it has since smartened up the app). But Google might need a few more IQ points to get this one right. It brought up a Google search page offering a Huffington Post article (thanks for the traffic) on Republicans who want to criminalize abortion and several sites that explained the ins and outs of the procedure, but only the paid results offered a way to find a provider.

So who came out on top?

In my completely unscientific test run, Google’s search app seemed about as smart as Siri. Each shined in different scenarios. But if Google has any real ambitions of being a Siri killer, it better figure out where to hide a dead body.

HMM-based Speech Synthesis: Fundamentals and Its Recent Advances

The task of speech synthesis is to convert normal language text into speech. In recent years, the hidden Markov model (HMM) has been successfully applied to acoustic modeling for speech synthesis, and HMM-based parametric speech synthesis has become a mainstream speech synthesis method. This method is able to synthesize highly intelligible and smooth speech sounds. Another significant advantage of this model-based parametric approach is that it makes speech synthesis far more flexible compared to the conventional unit selection and waveform concatenation approach.

This talk will first introduce the overall HMM synthesis system architecture developed at USTC. Then, some key techniques will be described, including the vocoder, acoustic modeling, parameter generation algorithm, MSD-HMM for F0 modeling, context-dependent model training, etc. Our method will be compared with the unit selection approach and its flexibility in controlling voice characteristics will also be presented.

The second part of this talk will describe some recent advances of HMM-based speech synthesis at the USTC speech group. The methods to be described include: 1) articulatory control of HMM-based speech synthesis, which further improves the flexibility of HMM-based speech synthesis by integrating phonetic knowledge, 2) LPS-GV and minimum KLD based parameter generation, which alleviates the over-smoothing of generated spectral features and improves the naturalness of synthetic speech, and 3) hybrid HMM-based/unit-selection approach which achieves excellent performance in the Blizzard Challenge speech synthesis evaluation events of recent years.

Google explains how more data means better speech recognition — Data

A new research paper from Google highlights the importance of big data in creating consumer-friendly services such as voice search on smartphones. More data helps train smarter models, which can then better predict what someone will say next, letting you keep your eyes on the road.

photo: Shutterstock / watcharakun

A new research paper out of Google describes in some detail the data science behind the company’s speech recognition applications, such as voice search and adding captions or tags to YouTube videos. And although the math might be beyond most people’s grasp, the concepts are not. The paper underscores why everyone is so excited about the prospect of “big data” and also how important it is to choose the right data set for the right job.

Google has always been a fan of the idea that more data is better, as exemplified by Research Director Peter Norvig’s stance that, generally speaking, more data trumps better algorithms (see, e.g., his 2009 paper titled “The Unreasonable Effectiveness of Data”). Although some hair-splitting does occur about the relative value (or lack thereof) of algorithms in Norvig’s assessment, it’s pretty much an accepted truth at this point and drives much of the discussion around big data. The more data your models have from which to learn, the more accurate they become, even if they weren’t cutting-edge stuff to begin with.

No surprise, then, that more data also turns out to be better for training speech-recognition systems. The researchers found that larger data sets and larger language models (here’s a Wikipedia explanation of the n-gram type involved in Google’s research) result in fewer errors predicting the next word based on the words that precede it. Discussing the research in a blog post on Wednesday, Google research scientist Ciprian Chelba gives the example that a good model will attribute a higher probability to “pizza” as the next word than to “granola” if the previous two words were “New York.” When it comes to voice search, his team found that “increasing the model size by two orders of magnitude reduces the [word error rate] by 10% relative.”

The real key, however — as any data scientist will tell you — is knowing what type of data is best to train your models, whatever they are. For the voice search tests, the Google researchers used 230 billion words that came from “a random sample of anonymized queries from google.com that did not trigger spelling correction.” However, because people speak and write prose differently than they type searches, the YouTube models were fed data from transcriptions of news broadcasts and large web crawls.

“As far as language modeling is concerned, the variety of topics and speaking styles makes a language model built from a web crawl a very attractive choice,” they write.

This research isn’t necessarily groundbreaking, but helps drive home the reasons that topics such as big data and data science get so much attention these days. As consumers demand ever smarter applications and more frictionless user experiences, every last piece of data and every decision about how to analyze it matters.

Smartpen automatically sends your notes and audio to the cloud

With touchscreens and speech-to-text rapidly taking over where handwriting left off, pens and pencils are slowly dying off as a means to log long notes. But for now, for short meeting notes and vital messages, writing remains our go-to method. Therefore, it only makes sense that, along with our smartphones, we now have a smartpen.

Although it looks like a normal pen, Livescribe’s Sky Wi-Fi Smartpen can remember everything you’ve written on paper or said during your note taking, and can actually replay the audio from the moment you wrote something when you tap that area on the page. In addition to local storage, notes and audio can also be wirelessly sent to your Evernote account for archiving.

Offered in 2GB, 4GB, and 8GB versions, the smartpen can hold up to 800 hours of audio and recharges using a micro-USB connection. You can check out a demonstration of how the smartpen is designed to work in the video below.

Dragon NaturallySpeaking 12 Premium review

Dragon NaturallySpeaking remains the number one speech-recognition software tool for dictation. But version 12 brings relatively little new to the party. Here’s our Dragon NaturallySpeaking 12 Premium review.

NaturallySpeaking 12 Premium continues Dragon’s reign as the king of all voice-recognition software. But it remains a premium product with a premium price. Whether this means that users of NaturallySpeaking 10 or 11 will be prepared to shell out to make the upgrade remains to be seen, but speech-recognition virgins need look no further.

Of course, speech recognition is not for everyone, but with training, Dragon NaturallySpeaking 12 Premium transcribes accurately at great speed. That training is required, but once undertaken NaturallySpeaking is a world beyond cheaper and free speech-recognition apps. Indeed, if you’ve never used Dragon this tool will feel like science fiction. And for those who find typing difficult for any reason, this can be a boon. You also get a microphone headset in the boxed edition of Dragon NaturallySpeaking 12 Premium.

New features in Dragon NaturallySpeaking 12 Premium

For those who are tempted by the upgrade, there are a couple of new features to consider, in addition to the always claimed improvements in speed and accuracy (we’ll get on to both below, in the section entitled ‘Using Dragon NaturallySpeaking 12 Premium’). We’re not sure either is a showstopper, though, unless you are required to use voice commands to navigate your PC. You can now use Dragon to fully navigate Outlook.com and Gmail, with Dragon icons built into their interfaces.

Geographical addresses format correctly on the fly, too.

Training Dragon NaturallySpeaking 12 Premium

Nothing in life is easy. Well, using NaturallySpeaking is - but only once you’ve set it up, practised using it, and trained it to recognise your tones. This is not difficult, but it is time consuming. (I speak as one who once demonstrated this technology on live television without the requisite training time. Trust me: take the time.) Nuance provides plenty of tips and documentation. It’s straightforward and even fun. You have to read back text from the screen, texts that vary in difficulty, length, and content. There’s also a walk through of how to use the software.

Installing from the disc on our Windows 7 laptop was simple and speedy. We spent about half an hour going through the training process before we started using Dragon NaturallySpeaking 12 Premium. This, reader, is because we have nothing better to do than review software. You may wish to get started immediately - rest assured that you can jump back into training your user profile at any point. At the very least before you get started you can choose your regional accent from an extensive list, and point the software toward your emails in order that it can investigate your syntax and vocabulary.

Using Dragon NaturallySpeaking 12 Premium

NaturallySpeaking remains a cinch to use. The interface is so simple as to be virtually unnoticeable, a critical factor in a supportive utility such as this. A grey toolbar sits at the top of your screen, with an optional sidebar on the right-hand side. The toolbar lets you know when the microphone is active and offers access to menu items including profile, tools, vocabulary, modes, audio and help.

Getting started with dictation is simple - I’ve used this type of software before, so I offered a quick look at NaturallySpeaking to my wife. She picked it up straight away. With training the accuracy was nothing short of stunning. I have an odd hybrid Yorkshire/London accent, and a tendency to mumble as a result of a facial operation many years ago. But I’d say that NaturallySpeaking was more than 90 percent accurate. More importantly, the basic editing tools that work with Word make it easy to rectify mistakes on the fly (saying ‘delete’ or ‘new paragraph’ does what you might expect it to). This is critical if NaturallySpeaking is to earn its corn as part of your productivity arsenal, but takes time to master.

Further voice commands will make Dragon more useful - again, getting the most from this investment requires effort. You can add punctuation by saying ‘full stop’, for instance. A Quick Reference Card offers a quick overview of voice commands to use when dictating text and when simply using Dragon to navigate the OS. Within Windows 7 and Windows 8 such voice commands are baked in to the accessibility options, of course, but if you require voice commands it makes sense to use the same program to dictate as to navigate.

sound card supporting 16-bit recording

DVD-ROM drive for installation

This is the best speech-recognition dictation software there is. But it is a significant investment, both in terms of time and money. If you already have a recent version of NaturallySpeaking and a decent headset, the upgrade may not be such an attractive proposition. But for those looking for a tool with which to dictate rather than type, this is the one.

Nuance Dragon NaturallySpeaking 11 Premium

It’s taken two years for Nuance to update its market-leading voice recognition software Dragon NaturallySpeaking. We liked version 10, so what’s new in Dragon NaturallySpeaking 11 Premium?

Dragon NaturallySpeaking 9.0 Preferred

Voice-recognition software has come a long way in the past few years.

Dragon NaturallySpeaking

Voice-recognition software has come a long way since the days when it would understand only about one word in 50. Powerful processors and more sophisticated software mean that talking to your PC to create text onscreen is now a reality. And ScanSoft’s Dragon NaturallySpeaking software is among the best-known packages available.